Beta Phase Learning Sessions

Project overview

Our company was preparing to launch a new product targeting contractors. Our decisions and designs up to this point had been based on early-stage research with potential customers, but between the fast pace of software delivery, the pressure to meet business goals, and the need to stay ahead of the competition, we did not have the luxury of consistently testing new workflows and designs for validation. To counteract this, our team came up with the idea of combining traditional moderated usability testing with training sessions. We would train our closed-beta clients on how to use the software in its current state, while uncovering opportunities to improve workflows that didn’t connect with their mental models.

Solution summary

Our team implemented a new process with our closed-beta clients called 1:1 Learning Sessions. Instead of attending a regular training session with one of our training managers and learning by watching, our clients would have the opportunity to “learn by doing”, directly with the people who designed the app. The format consisted of prompting the users with tasks, just like a traditional usability test - but instead of simply passing or failing, if a user got stuck, we would show them how to complete the task correctly according to the current implementation of the software. This allowed us to observe whether our designs were intuitive and, if not, ask the users questions that would directly shape how we redesigned them to solve their problems.

Audience

For this stage of our rollout, we were working with three primary personas:

Managers - those who were buying our product for their teams. Managers needed to feel that they were getting their money’s worth. They had taken a risk on a brand new platform and needed to see some of the rewards up front.

Accountants - these were our power users. Making their job easier and more efficient was the key to winning their trust, and the primary problem we set out to solve. They were going to be the people using the program the most, and the most likely to have questions and need support along the way, so they were the best resource for us to discover problems and opportunities.

Subcontractor partners - these were our secondary users. Our product is collaborative software, so diagnosing and fixing any major problems they encountered would clear the way for upstream adoption.

Another way this differed from traditional usability testing was that we committed to doing these sessions with every closed-beta participant, instead of capping it at a specific number, which could be anywhere from 5-8 depending on who you ask (personally, I’m inclined to agree with NNG).

Research Goals

Looking back on our previous research, we wanted to ensure that our users could achieve their primary goals:

Track vendor compliance (risk mitigation)

Pay vendors in good standing quickly (core business)

Collect invoices and other documentation (risk mitigation)

Watching our users do this live was the best way to get to the “why” fast. We could dig deeper when a problem occurred, and unearth what the customer was expecting to see in the product and didn’t.

The secondary goal was to validate our existing product roadmap and/or discover unanticipated user needs. It’s hard to adequately evaluate the effectiveness of a program that you aren’t intricately familiar with. As our beta clients became more proficient with the current version of the app, they might want new functionality to support areas of their workflow that we weren’t already aware of, and we needed to be prepared to listen.

Script writing

During this phase, I regularly collaborated with the Design Lead to establish a series of prompts that would allow us to determine whether the user could complete the tasks necessary to fulfill their job duties. As with writing traditional usability test scripts, it was important to avoid asking any leading questions. The biggest challenge was avoiding the same verbiage in the prompts that we had already established in the software. For example, on one screen, there was a button that said “Create Vendor.” Asking a user “How would you create a vendor?” wouldn’t give us much insight. Instead, our prompt was “For our first scenario, let's say you've just met a new contractor and want to start working with them. They're called [Name], I've added their details in the Zoom chat. How would you put their information into the app?” We also used this time to create mock data for our clients to use, so they didn’t have to use their real project information for testing purposes.

Collaboration

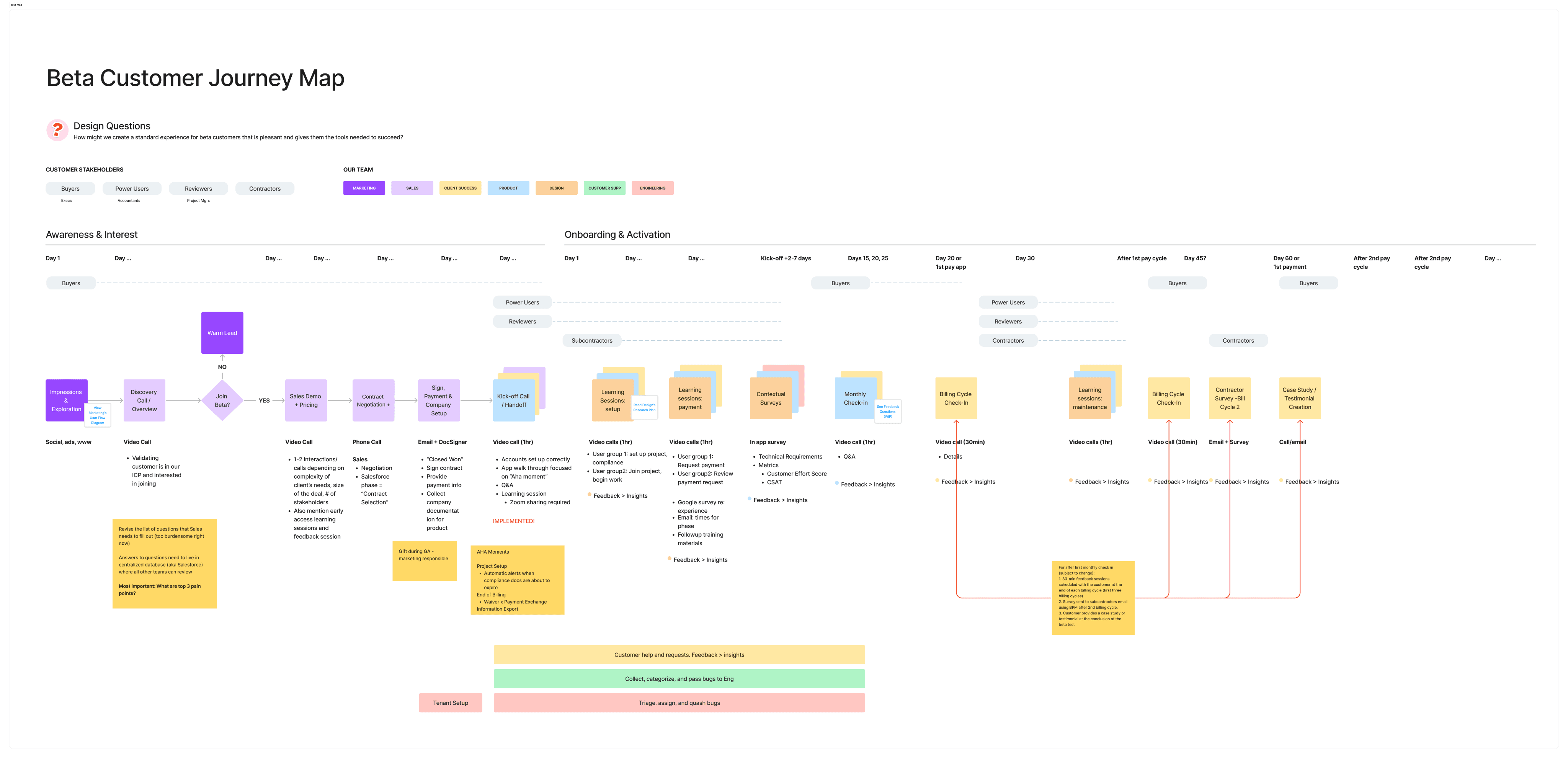

Since this was the first time that a project of this nature had been carried out at the company, the collaboration effort between different departments required intentionality. While we as designers were familiar with working with the Client Success and Training teams in terms of receiving client feedback, we had to be mindful of the established processes that came with onboarding, which was new territory. We brought Marketing, Sales, Client Success, Training, Product, Design, and Engineering together early on in the process to create a timeline for the different phases in which our users would interact with the software, and decide which teams would be responsible for which phases.

After aligning on responsibilities, we began working on the format of the actual sessions. I took the lead on making a “how-to” document for the Client Success and Product/SME teams so they were aware of the expectations of their respective roles. This was circulated to all teams for feedback to provide everyone with an opportunity to ask questions or voice concerns.

The primary objective of this document was to prepare everyone to take notes. For the first couple of sessions, the plan was to have all representatives from product, design, and client success on the calls - but we knew that was not sustainable long-term, as everyone had other commitments outside of this project. Eventually, the calls would consist of one product designer, who would always be the moderator; a CSM, who would take notes; and a product manager, who would act as the vendor so we could give clients a full picture of the process from their subcontractor’s perspective. Engineers would also be aware of the schedule so they could be on standby in case issues arose. It was important to ensure that the notetaker knew what to listen for and record. We explained some best practices, such as:

Don’t try to transcribe everything - keep notes short and high-level.

Focus on things you haven’t heard before - things that make you think “Oh, that’s interesting.”

Capture quotes. This gives us insight into how the user thinks/feels in their own words, and helps us speak the same language as the client.

We also linked out to some external resources for additional information.

Mock Sessions

Once we felt the script was complete, we ran mock sessions with account executives from a different department. They were familiar enough with the product and the personas we were testing, but not so much that they could breeze through the test. This allowed us to evaluate whether our format was easy to follow, predict where troubleshooting might need to occur, and give users better expectations when we sent the calendar invites. It also gave us the chance to practice transitioning from the user to one of our team members, who was pretending to be our client’s subcontractor. There was some friction at first with switching between different accounts, but after a couple of these tests, we smoothed it out.

Scheduling clients

After implementing the feedback we got during our mock tests and troubleshooting with engineers where necessary, we were able to start scheduling sessions with clients. We outlined the technical requirements they would need to meet in order to participate: a desktop computer or laptop, internet access, and a web browser. We also set expectations for timing: 5 minutes for introductions, 30-45 minutes for the session itself, and the remaining time for Q&A and overall feedback.

The overview of these sessions was explained to clients by our Sales and CSM partners during the kickoff call. To reiterate expectations, we included a short description in the calendar invites, which came from the clients’ CSMs:

Our colleagues will prompt you with a few scenarios to help you complete real-life tasks. As you go through each task, you’ll be able to talk through what you’re thinking and they’ll answer questions.

We also advised them to test their login credentials ahead of the session to make sure there were no issues and to contact their CSM if there were.

Synthesis

We set up a Google spreadsheet to keep track of all of the participants and their responses to each task. In addition to these notes, we created a separate page to log the patterns that we began noticing after the first 2-3 tests. We broke these findings down into usability issues and feature requests. Everything tagged as a usability issue was then rated on a three-point severity scale: 1-Pebble, 2-Rock, 3-Boulder. This was a take on the product framework where small problems are considered pebbles and large blockers are considered boulders. For our use case, pebbles were anything that the user found to be an annoyance but that didn’t prevent them from accomplishing the task, boulders were complete task blockers, and rocks fell in between. We began meeting with the product manager on a bi-weekly basis to prioritize the work and get it on the roadmap.
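To make the tagging scheme concrete, here is a minimal sketch of how the findings log could be modeled. It is an illustration only: our actual log lived in a spreadsheet, and the names `Severity`, `Finding`, and `prioritize`, along with the two sample findings marked hypothetical, are assumptions made for this example.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import List, Optional


class Severity(IntEnum):
    """Three-point scale applied to usability issues only."""
    PEBBLE = 1   # an annoyance; the task can still be completed
    ROCK = 2     # falls between a pebble and a boulder
    BOULDER = 3  # a complete task blocker


@dataclass
class Finding:
    summary: str
    kind: str  # "usability" or "feature_request"
    severity: Optional[Severity] = None  # left empty for feature requests


def prioritize(findings: List[Finding]) -> List[Finding]:
    """Return rated usability issues, most severe first."""
    rated = [f for f in findings if f.kind == "usability" and f.severity is not None]
    return sorted(rated, key=lambda f: f.severity, reverse=True)


findings = [
    # Hypothetical entry, included only to show a low-severity row.
    Finding("Hesitated over a button label", "usability", Severity.PEBBLE),
    # Hypothetical entry, included only to show an unrated feature request.
    Finding("Asked for an export option", "feature_request"),
    Finding("Could not invite vendor to project", "usability", Severity.BOULDER),
]

ordered = prioritize(findings)
for f in ordered:
    print(f"{f.severity.value}-{f.severity.name.title()}: {f.summary}")
```

Sorting boulders to the top mirrors how we walked the list with the product manager: blockers first, annoyances last, and feature requests handled separately.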

Iteration

The first opportunity we had for a design iteration came after realizing that all users (6 at this point) had failed the task of inviting their vendors to a project. This was considered a boulder, as one of the main value propositions of our platform was collaboration with other parties. Since the users’ confusion stemmed from unclear directions on how to invite the vendor, we implemented a quick fix that involved hiding the ‘invite vendor’ button until all of the prerequisites were met.

Even with the new iteration, only 1 out of 13 users completed the task. This made it clear that we would need to re-think the entire workflow for our future clients.

V1

[Mockup: Billing Setup screen for the “Bright’s Creations” project, showing an enabled “Invite contractor to project” button and the error message “Budget items must be added before inviting contactor”.]

Users found this error message confusing - it didn’t fully explain what they needed to do to correctly invite their contractor.

Having this button enabled made users think they could immediately interact with it, when there was actually a prior step required.

V2

[Mockup: the same Billing Setup screen with no invite button, an alert icon, and the prompt “Let’s get started by adding line items”.]

We started by removing the ‘invite contractor’ button on the initial screen, so there would be no opportunity to raise that error.

We added an alert icon to draw attention to the item that needs action.

We also tried to make the CTA language more in line with the verbiage that our clients use.

[Mockup: Billing Setup screen with line items added, the “Invite contractor to project” button visible, and the confirmation “Contractor successfully invited to project”.]

Once the line items were added, the ‘invite’ button appeared and could be clicked to successfully send the email invite.